In high-stakes industrial environments, the precision of measuring tools is a key factor separating structural integrity from mechanical failure. In aerospace engineering or large-scale construction, a deviation of a single millimeter, or less, in a tape measure or caliper reading can lead to costly rework, serious delays, and rising operational costs. Ensuring the reliability of these instruments requires a dual-focus engineering approach: Scale Accuracy Calibration to maintain metrological traceability, and Drop Impact Resistance Testing to validate mechanical durability. By adhering to international standards such as ISO/IEC 17025 and applying rigorous on-site inspection protocols, stakeholders can be confident that their tools stay within their specified tolerances throughout their operational life.

Key Takeaways

- Metrological traceability to SI units provides the objective basis for verifying scale accuracy.

- Environmental stressors, particularly thermal fluctuations and humidity, can introduce systematic measurement errors.

- Drop impact testing (ISTA 1A) identifies structural vulnerabilities that compromise internal sensor alignment.

- Regular calibration, with corrections applied to scale readings, offsets the effects of mechanical wear and drift.

The Metrology of Scale Accuracy: Precision and Traceability

Calibration is the comparison of a measurement device against a standard of known accuracy. In length and weight metrology, this involves establishing a "Traceability Chain": an unbroken sequence of calibrations, each with a stated uncertainty, that links the tool's reading back to a primary national or international standard (e.g., those maintained by NIST in the US). Without this chain, a measurement remains anecdotal rather than scientific. For industrial products, calibration is not a one-time event but a continuous quality cycle.

Measurement Uncertainty and Tolerance

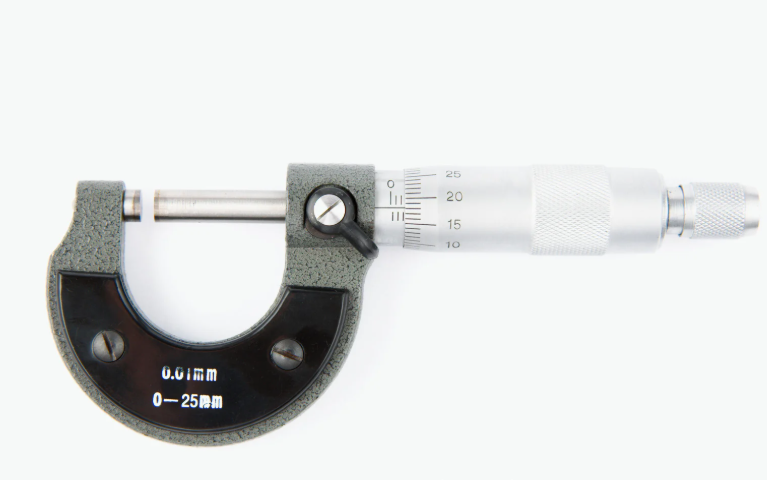

Technicians must distinguish between "accuracy" (closeness to the true value) and "precision" (repeatability of the result). The "Uncertainty of Measurement" quantifies the quality of a result, accounting for factors such as the tool's resolution, the operator's influence, and environmental conditions. If a micrometer's measurement uncertainty is comparable to or larger than the tolerance it is meant to verify, it cannot reliably separate conforming from non-conforming parts and is unsuitable for high-precision manufacturing.
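To make the uncertainty-versus-tolerance comparison concrete, here is a minimal Python sketch of an uncertainty budget. It assumes independent components combined by root-sum-square and an expanded uncertainty with coverage factor k = 2, following the GUM approach. The budget values, the ±0.010 mm part tolerance, and the 4:1 test uncertainty ratio (TUR) threshold are illustrative assumptions, not figures from this article. The 4:1 ratio is a common rule of thumb rather than a universal requirement.

```python
import math

def combined_standard_uncertainty(components):
    """Root-sum-square of independent standard uncertainties (same units)."""
    return math.sqrt(sum(u ** 2 for u in components))

def expanded_uncertainty(components, k=2.0):
    """U = k * u_c; k = 2 gives roughly 95 % coverage for a near-normal result."""
    return k * combined_standard_uncertainty(components)

def uncertainty_ratio(tolerance_half_width, expanded_u):
    """TUR expressed as tolerance half-width divided by expanded uncertainty."""
    return tolerance_half_width / expanded_u

# Hypothetical budget for a micrometer check; all values in millimetres
budget = {
    "resolution": 0.001 / math.sqrt(12),  # 1 µm digit step, rectangular distribution
    "reference_standard": 0.0005,          # assumed, from a gauge block certificate
    "repeatability": 0.0008,               # assumed std dev of repeated readings
    "temperature": 0.0004,                 # assumed residual thermal effect
}
U = expanded_uncertainty(budget.values())
tur = uncertainty_ratio(0.010, U)          # assumed part tolerance of ±0.010 mm
print(f"U = {U:.4f} mm, TUR = {tur:.1f}:1, adequate = {tur >= 4}")
```

With these assumed values, U is about 0.0021 mm and the ratio is about 4.7:1. Tightening the part tolerance to ±0.004 mm would drop the ratio below 2:1, which is the situation the paragraph above warns against.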

| Consequence of Error | Technical Impact | Business Outcome |
|---|---|---|
| Compromised Quality | Tolerance stack-up in assemblies | Higher rejection rates and waste |
| Structural Failure | Incorrect load-bearing calculations | Catastrophic safety hazards |
| Regulatory Breach | Non-compliance with ISO 9001 / ISO/IEC 17025 | Fines and loss of certification |
| Operational Drift | Unseen wear in moving parts | Premature equipment breakdown |
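The tolerance stack-up entry in the table can be illustrated with a short sketch. It compares a worst-case stack, where all tolerances add, with a root-sum-square (RSS) estimate, which assumes independent, centered part variations. It then adds the systematic bias from a miscalibrated tool. The four-part assembly, the ±0.05 mm tolerances, and the 0.02 mm bias are hypothetical.

```python
import math

def worst_case_stack(tolerances_mm):
    """Worst case: every part sits at its tolerance limit in the same direction."""
    return sum(abs(t) for t in tolerances_mm)

def rss_stack(tolerances_mm):
    """Statistical estimate, assuming independent, centered part variations."""
    return math.sqrt(sum(t ** 2 for t in tolerances_mm))

tolerances = [0.05, 0.05, 0.05, 0.05]  # four hypothetical parts, each ±0.05 mm
tool_bias = 0.02                       # hypothetical: tool reads 0.02 mm long on every part

worst = worst_case_stack(tolerances)   # 0.20 mm
rss = rss_stack(tolerances)            # 0.10 mm
# A systematic tool error is the same on every part, so it adds linearly
# and is not reduced by the RSS averaging effect.
bias_shift = tool_bias * len(tolerances)  # 0.08 mm
print(f"worst case ±{worst:.2f} mm, RSS ±{rss:.2f} mm, bias shift {bias_shift:.2f} mm")
```

The point of the comparison is that random part variation partly cancels across an assembly, but a calibration error does not: here it consumes most of the statistical stack-up budget on its own.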

Mechanical Durability: Drop Impact Resistance Testing

A precision tool that fails after its first fall is a significant liability in industrial settings. Mechanical strength testing, specifically the Unit Drop Test, simulates real-world hazards. This procedure is critical for digital calipers, laser levels, and weight scales that contain sensitive electronics or fragile glass components.

ISTA 1A and ASTM Test Protocols

Standardized drop testing, such as ISTA 1A for packaged products, involves releasing the tool from a set height onto a rigid surface such as concrete or steel. The height is typically 30 inches (76 cm) for lighter packages. The tool is dropped ten times in a defined sequence: on one corner, on three edges, and on all six faces. After the drops, the tool must pass a full recalibration check. Any shift in the "Zero Point" or permanent deformation of the measuring faces means the tool fails the standardized product inspection.
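The drop procedure above can be sketched as a simple inspection record. This sketch makes several assumptions. It treats the fall as free fall without air drag, so impact speed is v = √(2gh). It uses placeholder labels rather than the standard's own corner, edge, and face numbering. The zero-shift limit is a hypothetical parameter that each product specification would set.

```python
import math

G = 9.80665  # standard gravity, m/s^2

def impact_velocity(height_m):
    """Free-fall speed at impact, ignoring air drag: v = sqrt(2 * g * h)."""
    return math.sqrt(2 * G * height_m)

# One corner, three edges, six faces: ten drops in total.
# Placeholder labels only; the test standard defines the actual numbering.
DROP_SEQUENCE = ["corner"] + [f"edge {i}" for i in range(1, 4)] + [f"face {i}" for i in range(1, 7)]

def passes_drop_inspection(zero_readings_mm, faces_deformed, zero_limit_mm):
    """Pass only if every post-drop zero reading stays within the
    (hypothetical) limit and no measuring face is permanently deformed."""
    if len(zero_readings_mm) != len(DROP_SEQUENCE):
        raise ValueError("expected one zero reading per drop")
    zero_ok = all(abs(r) <= zero_limit_mm for r in zero_readings_mm)
    return zero_ok and not faces_deformed

v = impact_velocity(0.76)  # 30 in (76 cm) drop height
# Hypothetical zero readings of a digital caliper after each drop, in mm
readings = [0.00, 0.01, -0.01, 0.00, 0.02, 0.00, 0.00, 0.01, 0.00, 0.00]
result = passes_drop_inspection(readings, faces_deformed=False, zero_limit_mm=0.01)
print(f"impact speed about {v:.2f} m/s, pass = {result}")
```

In this example, a 76 cm drop gives an impact speed of about 3.86 m/s. The tool fails because the zero shift recorded after the fifth drop (0.02 mm) exceeds the assumed 0.01 mm limit, even though every other drop is within it.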

| Material Type | Coefficient of Thermal Expansion (×10⁻⁶ /°C) | Impact Resistance Level |
|---|---|---|
| Carbon Steel | 11.0 | High (prone to oxidation) |
| Stainless Steel (304) | 17.3 | Very High (corrosion resistant) |
| Aluminum Alloy | 23.1 | Moderate (lightweight but soft) |
| Fiberglass / Carbon Fiber | < 3.0 for carbon fiber along the fibers; typically higher for fiberglass | Extreme (thermally stable) |

Environmental Stress and Measurement Integrity

Measuring tools are not static; they respond to their environment. Metals expand with heat, and polymers may swell with humidity. Professional quality assurance therefore requires tools to be calibrated at a reference temperature, normally 20 °C (68 °F). ISO 1 sets this as the standard reference temperature for dimensional measurement. In harsh industrial environments, temperature fluctuations introduce systematic errors. These must be corrected mathematically or minimized by using materials with low thermal expansion coefficients, such as Invar or ceramics.
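As a worked example of mathematical compensation, the sketch below converts a reading taken at shop-floor temperature back to the 20 °C reference. It uses the linear expansion model ΔL = α·L·ΔT and coefficients from the material table above. It assumes the tool and the part are both at the same uniform temperature. The function name and the 35 °C scenario are illustrative.

```python
def length_at_reference(reading_mm, temp_c, alpha_tool, alpha_part, ref_c=20.0):
    """Correct a length reading to the reference temperature.

    The tool's graduations have expanded by (1 + alpha_tool * dT), so the part's
    actual length at temp_c is larger than the reading; the part is then scaled
    back to ref_c using its own coefficient. Both are assumed to be at temp_c.
    """
    dt = temp_c - ref_c
    length_at_temp = reading_mm * (1 + alpha_tool * dt)
    return length_at_temp / (1 + alpha_part * dt)

ALPHA_CARBON_STEEL = 11.0e-6  # per °C, from the material table
ALPHA_ALUMINUM = 23.1e-6      # per °C, from the material table

# Carbon steel tape reads 1000.000 mm on an aluminum part, both at 35 °C
corrected = length_at_reference(1000.0, 35.0, ALPHA_CARBON_STEEL, ALPHA_ALUMINUM)
print(f"{corrected:.2f} mm at 20 °C")  # prints 999.82 mm at 20 °C
```

Because the tape and the part expand at different rates, the raw reading overstates the part's 20 °C length by about 0.18 mm. When tool and part share the same coefficient, the two factors cancel exactly. This is one reason matched or low-expansion materials are preferred.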

Humidity and dust ingress can also compromise the internal mechanisms of dial indicators and the sensors in electronic levels. During on-site inspections, TradeAider inspectors perform rub tests on safety labels to confirm they remain legible. They also verify that battery compartments are properly sealed, so that environmental contaminants do not cause circuit failure over time.

Practical Maintenance for Longevity and Accuracy

Even a tool that passes every factory test requires professional maintenance to prevent degradation. Users and facility managers should follow these technical protocols:

- Preventive Cleaning: Removing particulate matter from measuring faces prevents abrasive wear that creates "flats" on spherical contacts.

- Lubrication Management: Using specialized low-viscosity oils to protect moving parts without attracting dust.

- Zeroing Frequency: Mandatory zeroing of digital instruments before every shift to account for mechanical drift.

- Recalibration Intervals: Standardizing recalibration at least once a year, or immediately following any significant drop event.
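The interval and drop-event rules above can be sketched as a small scheduling helper. It assumes a 365-day interval, matching "at least once a year", and a simple log of drop dates. The function names are hypothetical and not part of any calibration-management product.

```python
from datetime import date, timedelta

def recalibration_due(last_calibration, interval_days=365, drop_dates=()):
    """Date by which the tool must be recalibrated.

    Normally the end of the interval; but any drop recorded on or after the
    last calibration makes the tool due on the day of that drop.
    """
    due = last_calibration + timedelta(days=interval_days)
    drops_since = [d for d in drop_dates if d >= last_calibration]
    if drops_since:
        due = min(due, min(drops_since))
    return due

def needs_recalibration(today, last_calibration, interval_days=365, drop_dates=()):
    """True once the due date has been reached."""
    return today >= recalibration_due(last_calibration, interval_days, drop_dates)

last_cal = date(2023, 1, 10)
print(recalibration_due(last_cal))                                 # 2024-01-10
print(recalibration_due(last_cal, drop_dates=[date(2023, 6, 1)]))  # 2023-06-01
```

Drops logged before the last calibration are ignored, since that calibration already accounts for them.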

By integrating these technical validations—from standardized product quality inspection methods to robust calibration schedules—organizations can ensure that their measuring tools remain reliable assets for years to come.

Frequently Asked Questions (FAQ)

What is the difference between a calibration report and a certificate?

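Terminology varies between laboratories. In common usage, a calibration certificate confirms that the calibration took place and states a conform/non-conform verdict. A calibration report is a more detailed technical document that includes the actual measurement data, the uncertainty calculations, and the environmental conditions at the time of testing. Note that a certificate issued by an ISO/IEC 17025-accredited laboratory must still report the measurement results and their uncertainty.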

Can I use a tool that has failed a drop test if it still looks fine?

No. Visual integrity does not guarantee metrological accuracy. A drop can leave internal stress in the material or misalign the sensor bridge, producing "Linear Errors" that appear only at certain points of the tool's range. A tool that has failed a drop test should be taken out of service, and any tool dropped in the field must be recalibrated before further use.
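A zero check alone can miss such errors. Below is a minimal sketch of a multi-point verification. It assumes reference standards, such as gauge blocks, at several points across the range. It also assumes a hypothetical maximum permissible error (MPE) of 0.03 mm; real MPE values come from the product specification or the applicable standard.

```python
def multipoint_check(nominals_mm, readings_mm, mpe_mm):
    """Compare readings with reference values at several points in the range.

    Returns the error at each point and the nominal values whose error
    exceeds the maximum permissible error (MPE).
    """
    if len(nominals_mm) != len(readings_mm):
        raise ValueError("one reading is required per reference point")
    errors = [r - n for n, r in zip(nominals_mm, readings_mm)]
    failures = [n for n, e in zip(nominals_mm, errors) if abs(e) > mpe_mm]
    return errors, failures

# Hypothetical post-drop check of a 150 mm digital caliper against gauge blocks
nominals = [0.0, 25.0, 50.0, 100.0, 150.0]
readings = [0.00, 25.01, 50.01, 100.04, 150.02]
errors, failures = multipoint_check(nominals, readings, mpe_mm=0.03)
print(failures)  # [100.0]
```

Here the caliper still zeroes correctly, yet it reads 0.04 mm high at 100 mm. This is the kind of localized error that a visual check or a zero check would not reveal.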

How does NIST traceability benefit global trade?

NIST traceability ensures that a millimeter measured in the US agrees, within stated uncertainties, with a millimeter measured in any other country whose measurements are traceable to the SI. This international consistency is vital for the interchangeability of components in global supply chains.

What is the typical sample size for an order of 1,000 tools?

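Under ANSI/ASQ Z1.4 General Inspection Level II (G-II), a lot of 1,000 units falls in the 501 to 1,200 range. This range maps to code letter J and a sample size of 80 units under normal single sampling. The accept and reject numbers then depend on the AQL the buyer specifies, which balances statistical confidence against inspection cost.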
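The lookup behind that answer can be sketched in a few lines. This is a minimal sketch covering only the General Inspection Level II code letters and the normal single-sampling sample size for each letter. It does not model the AQL-specific accept/reject numbers or the arrows in the master table, which can redirect to a different sample size for some AQL values.

```python
import bisect

# ANSI/ASQ Z1.4 Table I, General Inspection Level II: upper bound of each lot-size range
LOT_SIZE_UPPER_BOUNDS = [8, 15, 25, 50, 90, 150, 280, 500, 1200, 3200,
                         10000, 35000, 150000, 500000]
# Code letter for each range above, plus "Q" for lots over 500,000
LEVEL_II_LETTERS = "ABCDEFGHJKLMNPQ"
# Table II-A, single sampling, normal inspection: sample size per code letter
SAMPLE_SIZES = {"A": 2, "B": 3, "C": 5, "D": 8, "E": 13, "F": 20, "G": 32,
                "H": 50, "J": 80, "K": 125, "L": 200, "M": 315, "N": 500,
                "P": 800, "Q": 1250}

def level_ii_sample_size(lot_size):
    """Return (code letter, sample size) for General Inspection Level II."""
    if lot_size < 2:
        raise ValueError("lot size must be at least 2")
    # bisect_left finds the first range whose upper bound is >= lot_size
    letter = LEVEL_II_LETTERS[bisect.bisect_left(LOT_SIZE_UPPER_BOUNDS, lot_size)]
    return letter, SAMPLE_SIZES[letter]

print(level_ii_sample_size(1000))  # ('J', 80)
```

Treat the result as the starting point for a sampling plan. Confirm it against the master table for the AQL actually agreed with the buyer.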

Does temperature affect digital laser levels?

Yes. Temperature changes the density, and therefore the refractive index, of the air the beam travels through, and it can cause the internal chassis to expand. Many high-quality laser levels include temperature-compensated sensors (TCS) that adjust the reading automatically within a specified operating range, such as -10 °C to +40 °C.
